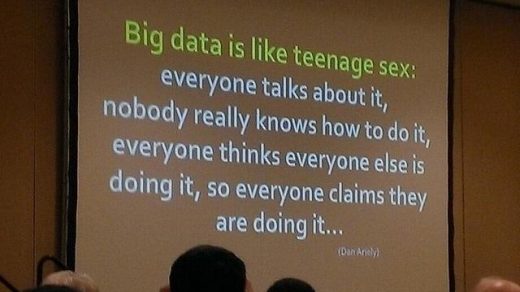

One of the key questions in AI ethics is whether neutrality should be an objective, and if so, when. Neutrality means that AI systems do not favor any group, individual, or value over another, or discriminate against them. Neutrality (not to be confused with Net Neutrality) can be seen as a desirable goal for AI ethics, as it can promote fairness, justice, and impartiality. However, it can also be seen as an impossible or undesirable goal, as it can ignore the complexity, diversity, and contextuality of human values and situations.

In my article on the Open Ethics blog, I suggest that neutrality is not always applicable and should be used with caution. I discuss how neutrality can harm AI ethics and provide a critical analysis of the pros and cons of neutrality in AI systems. Here are several key points from the summary:

- Systems as such do not favor or discriminate against any group, individual, or value. Discrimination arises from human decisions or from implicit bias in the training data.

- Neutrality can be seen as a desirable goal for AI ethics, as it can promote fairness, justice, and impartiality, respect human rights and values, and foster trust and accountability, but this is not always the case.

- Moreover, neutrality can sometimes be an impossible or undesirable goal for AI ethics, as it can ignore the complexity, diversity, and contextuality of human values and situations, and create ethical dilemmas and trade-offs that wouldn’t otherwise exist.

- Neutrality is not always applicable and should be used with caution. AI ethics should consider the perspectives, interests, and values of different stakeholders and contexts.

- The article provides some examples of how neutrality harms AI ethics, such as the lack of diversity and inclusion in AI development, the difficulty of defining and measuring neutrality, and the risk of reinforcing existing biases and inequalities.